Local LLM Deployment for Documents: Achieving Security, Control, and Compliance in AI

Across industries, from finance and legal to healthcare and manufacturing, organizations are amazed by the capabilities of Large Language Models (LLMs). These models can read, understand, and reason over documents with near-human intelligence – extracting key data, classifying content, summarizing complex reports, and even identifying inconsistencies or risks within massive datasets.

However, as impressive as cloud-based AI may be, the biggest obstacle remains security. Most enterprises simply cannot risk sending confidential contracts, invoices, HR records, or compliance files to an external cloud service. Uploading sensitive or regulated data exposes organizations to data leakage, privacy violations, and compliance breaches.

And even beyond security, practicality becomes a major challenge. Constantly transferring terabytes of documents to a cloud LLM is inefficient, costly, and often impossible for real-time analysis. A truly document-intelligent system must operate continuously in the same controlled environment where the data resides, with direct access to all related files and databases. Sending random, isolated files to a remote AI engine simply cannot deliver comprehensive, context-aware analysis.

That’s why more and more enterprises are shifting toward local deployment of LLMs. Running an LLM on-premise or in a private cloud allows the organization to keep every document securely stored within its own infrastructure, while giving the AI full, controlled access to perform deep analysis, search, extraction, and understanding.

Deploying LLMs locally brings the best of both worlds:

- The intelligence and automation power of AI, and

- The data protection, governance, and compliance that enterprises require.

This article explores how to deploy LLMs locally for document processing: the architecture, the security principles, and the practical steps that make local deployment the secure path to AI.

⚙️ Why Local LLMs for Documents?

Today, almost every organization wants to harness the power of Generative AI – the ability to read, understand, and analyze documents at scale. Yet most enterprises face the same roadblock: security and data exposure. Sending confidential contracts, invoices, HR files, or legal documents to a third-party cloud-based LLM introduces serious risks. Sensitive information could be exposed, mishandled, or fall outside regulatory boundaries. For industries under strict compliance requirements, such as finance, government, healthcare, or law, this is simply not acceptable.

Deploying LLMs locally, either on-premise or within your private cloud (VPC), removes the need to send documents to a third party and gives organizations the control they need.

Here’s how local deployment bridges the gap between AI innovation and enterprise-grade security:

🔒 Your documents never leave your infrastructure

All data remains inside your secured environment. The LLM operates within your network perimeter, ensuring no document or metadata is ever transmitted externally.

🧭 Full control over access, logging, and compliance

Integrate with your existing identity systems (SSO, Active Directory, etc.), apply granular role-based permissions, and maintain complete audit trails of every user interaction. Every query and every document access is logged and traceable.

⚙️ Tailored AI to your own data and workflows

Local deployment lets you fine-tune and adapt the LLM to your document types, formats, and business processes. You can customize prompts, extraction patterns, and workflows that align precisely with your operations — something a generic public model can never provide.

🧩 Seamless integration with internal systems

Because the model runs within your infrastructure, it can directly access connected systems like ERP, CRM, or shared drives. This enables more comprehensive and contextual analysis, where the LLM can understand relationships across multiple documents in real time.

💡 Consistent, secure, and always-available AI

Unlike public APIs that depend on external connectivity, a local LLM runs continuously inside your network. It can handle documents instantly, even in disconnected or air-gapped environments, providing full operational independence.

🧱 High-Level Architecture: LLM Behind the Firewall

At elDoc, we believe that true AI transformation must go hand-in-hand with data security, control, and compliance. That’s why our architecture is designed around a simple but powerful principle:

Bring the intelligence to your data, not your data to the intelligence.

Whether deployed on a secure server, within a private cloud, or even on a local workstation, elDoc’s architecture ensures that your documents never leave your environment, while the LLM operates securely behind your firewall. Smaller, optimized LLMs can even run directly on a powerful laptop or desktop, allowing users to process and analyze documents offline or in restricted networks. For enterprise-scale workloads, the same architecture scales across GPU clusters or private VPC environments, maintaining identical data protection principles.

This architecture, built around elDoc, is designed to deliver security, scalability, and full LLM readiness for intelligent document processing. At its core, elDoc orchestrates an integrated environment where MongoDB, a Vector Database, and a locally deployed LLM work in unison to provide real-time document understanding, workflow automation, and regulatory compliance – all operating securely within your own infrastructure. With elDoc at the center, every layer of the architecture, from data storage to AI reasoning, is optimized for privacy, performance, and interoperability. MongoDB provides high-performance document and metadata storage; the Vector Database powers semantic search and Retrieval-Augmented Generation (RAG); and the local LLM enables advanced document comprehension, classification, and cross-referencing without exposing any data to external systems.

The result is a self-contained, enterprise-grade AI ecosystem that keeps your data private while empowering your teams with the intelligence of Generative AI – all within the trusted, compliant, and fully controlled elDoc environment.

How elDoc’s Architecture Brings LLM Intelligence Securely to Your Documents

📥 1. Document Ingestion & Normalization

The journey begins with capturing, cleaning, and preparing your document data. In elDoc, you can upload and process virtually any type of file from highly structured forms to completely unstructured text documents – all within a unified and intelligent pipeline.

Supported Inputs:

- 📄 Structured documents: bank statements, invoices, utility bills, receipts, purchase orders, spreadsheets.

- 🧾 Semi-structured documents: reports, applications, internal forms.

- 📚 Unstructured documents: contracts, policies, guidelines, procedures, price books, and correspondence.

- 🖼️ Images and scans: photos or scanned copies of documents in any format.

Processing Steps:

- 🧠 Computer Vision Preprocessing – elDoc applies intelligent vision correction to normalize scans before OCR. This includes fixing image artefacts, adjusting brightness, correcting rotation or skew, and removing background noise to ensure optimal text recognition.

- 🔤 Optical Character Recognition (OCR) – Recognizes and converts printed or handwritten text into machine-readable form, ensuring every document, even a scanned or photographed one, becomes fully searchable and analyzable.

- 🧩 Text Extraction & Structural Layout Analysis – elDoc identifies document sections, tables, and paragraphs, preserving context and visual relationships for accurate understanding.

- 🗂️ Data Parsing & Metadata Capture – Extracts relevant data points such as dates, vendors, document types, invoice IDs, reference numbers, and any other business-specific attributes.

Purpose:

Transforms chaotic, mixed-format documents into clean, structured, and machine-readable text, perfectly ready for advanced AI reasoning, classification, and analysis.

📌 Example: Upload a scanned invoice or a lengthy contract, and elDoc automatically enhances the image, performs OCR, extracts critical fields, and normalizes the content for instant search, indexing, and intelligent analysis.
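The following minimal Python sketch makes the metadata-capture step concrete. It uses regular expressions to pull an invoice ID, a date, and a total out of OCR output. It is not elDoc's extraction engine. The field names, the patterns, and the `capture_metadata` helper are hypothetical. Production systems combine layout analysis and models rather than relying on a handful of regexes.

```python
import re
from datetime import datetime

# Hypothetical patterns for illustration only; real invoices vary widely in layout.
INVOICE_ID = re.compile(r"\bInvoice\s*(?:No\.?|Number|#)\s*[:\-]?\s*([A-Z0-9\-]+)", re.IGNORECASE)
DATE = re.compile(r"\b(\d{4}-\d{2}-\d{2}|\d{2}/\d{2}/\d{4})\b")
TOTAL = re.compile(r"\bTotal\s*(?:Due)?\s*[:\-]?\s*\$?\s*([\d,]+\.\d{2})", re.IGNORECASE)


def normalize_date(raw: str) -> str:
    """Convert YYYY-MM-DD or MM/DD/YYYY (US order assumed) to ISO 8601."""
    fmt = "%Y-%m-%d" if "-" in raw else "%m/%d/%Y"
    return datetime.strptime(raw, fmt).date().isoformat()


def capture_metadata(ocr_text: str) -> dict:
    """Return whichever of invoice_id, date, and total can be found in OCR text."""
    meta = {}
    if (m := INVOICE_ID.search(ocr_text)):
        meta["invoice_id"] = m.group(1)
    if (m := DATE.search(ocr_text)):
        meta["date"] = normalize_date(m.group(1))
    if (m := TOTAL.search(ocr_text)):
        meta["total"] = float(m.group(1).replace(",", ""))
    return meta


sample = "ACME Corp\nInvoice No: INV-2024-0042\nDate: 03/15/2024\nTotal Due: $1,250.00\n"
print(capture_metadata(sample))
# {'invoice_id': 'INV-2024-0042', 'date': '2024-03-15', 'total': 1250.0}
```

Normalizing dates to ISO 8601 at capture time lets the stored metadata be compared and filtered consistently later, whatever format the original document used.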

🗄️ 2. Document Storage – Powered by MongoDB

Once processed, all structured and unstructured document data is securely stored within your local infrastructure using MongoDB, ensuring the perfect balance between performance, flexibility, and protection. MongoDB acts as the central data backbone of elDoc – a high-performance, schema-flexible database that adapts effortlessly to a wide variety of document formats and metadata structures.

Why MongoDB?

- 🧩 Flexible Schema Design: MongoDB’s document-based architecture accommodates variable and evolving document structures, from invoices and contracts to correspondence, forms, and reports, without the need for rigid templates.

- ⚡ High Performance & Real-Time Access: Optimized for fast query execution, it supports instant retrieval of documents and metadata for search, validation, and AI workflows, even under heavy enterprise workloads.

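- 🔐 Enterprise-Grade Security: Encryption at rest, field-level encryption, and TLS for data in transit protect data confidentiality. MongoDB can also authenticate against enterprise identity systems such as LDAP and Kerberos, helping maintain a unified access control policy with your existing IAM and SSO setup (some of these capabilities require MongoDB Enterprise).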

- 🧱 Scalability & Reliability: Its distributed, horizontally scalable design allows organizations to handle millions of documents efficiently – essential for large-scale intelligent processing.

Access Control & Data Governance:

The LLM never directly interacts with the raw database. Instead, elDoc manages communication through secure, audited APIs that enforce zero-trust principles, ensuring that only authorized processes can query or modify data. Every access event is logged and traceable, providing complete visibility for compliance and audit teams.
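The mediated-access principle can be illustrated with a small gateway object. The LLM runtime receives only the gateway and never the database handle. Every request is checked against an explicit grant, and the default is to deny. Every attempt, allowed or not, is recorded. This is a hypothetical sketch: `AuditedDocumentGateway`, the grant format, and the in-memory dict standing in for MongoDB are assumptions for illustration, not elDoc's internal API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    timestamp: str
    principal: str
    action: str
    document_id: str
    allowed: bool


class AuditedDocumentGateway:
    """Hypothetical single entry point between AI services and stored documents.

    A dict stands in for the database here; callers such as the LLM runtime
    receive this gateway, never a direct database handle.
    """

    def __init__(self, store: dict, grants: dict):
        self._store = store      # document_id -> document
        self._grants = grants    # principal -> set of permitted actions
        self.audit_log = []      # AuditEvent records, one per access attempt

    def _authorize(self, principal: str, action: str, document_id: str) -> bool:
        # Default deny: a principal with no explicit grant gets nothing.
        allowed = action in self._grants.get(principal, set())
        self.audit_log.append(AuditEvent(
            timestamp=datetime.now(timezone.utc).isoformat(),
            principal=principal,
            action=action,
            document_id=document_id,
            allowed=allowed,
        ))
        return allowed

    def read(self, principal: str, document_id: str) -> dict:
        if not self._authorize(principal, "read", document_id):
            raise PermissionError(f"{principal} may not read {document_id}")
        return self._store[document_id]
```

Denied attempts are logged as well as successful ones. That gives audit teams visibility into probing, not just into successful reads.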

📌 Outcome:

All your documents remain encrypted, structured, and instantly accessible for AI-driven workflows, whether for search, extraction, or analysis, without ever leaving your secured perimeter. MongoDB ensures that elDoc delivers both the speed of innovation and the confidence of enterprise-grade data protection.

🔍 3. Indexing & Vector Database (RAG Layer)

To enable truly intelligent, context-aware document understanding, elDoc integrates a powerful Vector Database as part of its core architecture. This component forms the foundation of Retrieval-Augmented Generation (RAG) – a hybrid approach that combines semantic retrieval with LLM reasoning, helping ensure that every AI-generated answer is accurate, explainable, and grounded in your organization’s own data.

🧩 How It Works

After documents are processed and normalized, elDoc performs semantic indexing, converting unstructured text into meaningful data representations.

- Chunking for Context: Each document is intelligently divided into smaller, context-rich segments, or “chunks,” such as paragraphs, clauses, or table entries. This preserves the logical flow of the document while enabling more precise search and retrieval.

- Embedding Generation: For each chunk, elDoc generates a numerical vector, called an embedding, that represents the semantic meaning of the text, not just its keywords. This means the system understands that “termination clause” and “contract cancellation terms” refer to the same concept, even if phrased differently.

- Metadata Enrichment: Each embedding is stored together with relevant metadata, such as document type, department, date, vendor, contract value, or category, enabling multi-dimensional filtering and contextual retrieval.

- Storage in Vector Database: These embeddings are securely stored inside the Vector Database, which is fully integrated with elDoc’s access controls and MongoDB backend. The database is optimized for semantic similarity search, enabling real-time queries over millions of indexed document fragments.
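The indexing steps above can be sketched in plain Python in four parts:

- Split text into paragraph chunks.
- Turn each chunk into a normalized vector.
- Store the vector with its metadata.
- Answer queries by cosine similarity, with optional metadata filters.

To keep the example self-contained, `toy_embed` is a hashed bag-of-words stand-in for a real embedding model. Unlike a real model, it only measures word overlap, so it would not match “termination clause” to “contract cancellation terms.” The class and function names are hypothetical. The brute-force search stands in for the approximate nearest-neighbor indexes that vector databases typically use.

```python
import math
import re
import zlib
from collections import Counter
from dataclasses import dataclass


def chunk_paragraphs(text: str, max_words: int = 80) -> list:
    """Split on blank lines, then cap each chunk at max_words words."""
    chunks = []
    for para in re.split(r"\n\s*\n", text.strip()):
        words = para.split()
        for start in range(0, len(words), max_words):
            chunks.append(" ".join(words[start:start + max_words]))
    return chunks


def toy_embed(text: str, dims: int = 256) -> list:
    """Stand-in for a real embedding model: a hashed bag of words, L2-normalized.
    It reflects shared words only, not meaning."""
    vec = [0.0] * dims
    for word, count in Counter(re.findall(r"[a-z]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % dims] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class Chunk:
    doc_id: str
    text: str
    metadata: dict
    vector: list


class InMemoryVectorIndex:
    """Brute-force cosine search over every chunk (fine for a demo, not for millions)."""

    def __init__(self):
        self.chunks = []

    def add_document(self, doc_id: str, text: str, metadata: dict) -> None:
        for piece in chunk_paragraphs(text):
            self.chunks.append(Chunk(doc_id, piece, metadata, toy_embed(piece)))

    def search(self, query: str, k: int = 3, **filters) -> list:
        q = toy_embed(query)
        # Metadata filters narrow the candidate set before any scoring happens.
        candidates = [c for c in self.chunks
                      if all(c.metadata.get(key) == val for key, val in filters.items())]
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, c.vector)), c) for c in candidates]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [c for _, c in scored[:k]]
```

Keyword arguments to `search`, such as `doc_type="contract"`, filter on stored metadata before similarity scoring. This shows how the metadata enrichment step can narrow retrieval.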

🧠 Purpose & Functionality

This design allows the LLM to access only the most relevant, trusted, and up-to-date information when generating a response or performing analysis. Instead of “guessing” from its general training, the LLM consults your private vector knowledge base, ensuring that every insight is based on your own verified documents.

When a user submits a query — for example,

“Show me all vendor contracts with renewal terms longer than one year,”

elDoc performs a semantic search across the vector embeddings. It identifies relevant document sections (even if the text doesn’t use the exact phrasing), retrieves the top matches, and feeds them into the LLM as context for response generation.

This ensures that every output is:

- 📚 Contextually Accurate — Based on verified internal sources, not generic internet data.

- 🔒 Secure — Processed entirely within your infrastructure, with no external API calls.

- ⚙️ Traceable — Each LLM answer can be traced back to its original document segment, supporting audit and validation.
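Traceability comes from the step where the top matches are fed to the LLM. The sketch below assumes retrieval has already returned scored chunks. The prompt labels each excerpt with its source, so the model can cite it and an auditor can follow the citation back to the original segment. The `RetrievedChunk` type, the label format, and the prompt wording are illustrative assumptions, not elDoc's actual prompt.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    doc_id: str
    chunk_no: int
    text: str
    score: float  # similarity score from the vector search


def build_rag_prompt(question: str, chunks: list, max_chunks: int = 4) -> str:
    """Assemble a grounded prompt. Each excerpt carries a [doc_id#chunk_no]
    label so the answer can cite, and auditors can trace, its sources."""
    best = sorted(chunks, key=lambda c: c.score, reverse=True)[:max_chunks]
    context = "\n\n".join(f"[{c.doc_id}#{c.chunk_no}]\n{c.text}" for c in best)
    return (
        "Answer using only the excerpts below. Cite the bracketed source label "
        "for every statement. If the excerpts do not contain the answer, say so.\n\n"
        f"Excerpts:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

Capping the number of excerpts keeps the prompt within the local model's context window. Telling the model to say when the excerpts lack an answer discourages it from falling back on general training data.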

⚡ Key Advantages

- Semantic Understanding: Goes beyond keyword search by recognizing meaning, relationships, and intent within documents.

- Cross-Document Intelligence: Enables elDoc to analyze and correlate information across thousands of files, such as linking invoices to contracts or identifying missing compliance records.

- Scalability: The vector database efficiently handles millions of embeddings, allowing real-time responses even in large enterprise document ecosystems.

- Compliance and Auditability: Every search and retrieval action is logged, ensuring full transparency and accountability.

With elDoc’s RAG-driven Vector Database, your AI no longer searches the web – it searches your enterprise knowledge. Every answer is context-rich, confidential, and fully compliant, enabling you to trust AI outputs as much as your own documentation.

🧠 4. Local LLM Runtime – Your Private AI Engine

At the core of elDoc’s architecture lies its Local LLM Runtime – the intelligent engine that powers understanding, reasoning, and automation across all your documents. Unlike traditional AI solutions that depend on external APIs or third-party cloud services, elDoc enables complete on-premise deployment, ensuring that all document intelligence remains securely within your infrastructure.

⚙️ Flexible Deployment Options

elDoc is designed to adapt to any organizational environment, from small secure setups to large enterprise data centers:

- 💻 Local Laptop or Workstation: Ideal for confidential or air-gapped environments where users need to analyze documents without internet connectivity.

- 🖥️ On-Prem GPU/CPU Servers: Perfect for departmental or enterprise workloads where scalability, parallel processing, and continuous AI operations are required.

- ☁️ Private Cloud or VPC Deployments: For organizations leveraging their own cloud infrastructure, elDoc supports full LLM orchestration inside a Virtual Private Cloud (VPC) — maintaining data residency and compliance while offering dynamic scalability.

🧩 Architecture & Integration

The LLM is self-hosted and fully optimized in elDoc for document intelligence tasks such as extraction, summarization, classification, anomaly detection, and risk assessment. It integrates seamlessly with elDoc’s core platform and data layers through secure internal APIs:

- /generate: for intelligent text generation, document summarization, and report drafting.

- /chat: for conversational Q&A, interactive document exploration, and context-driven search across internal data.

- /extract_fields: for automated extraction of structured data, validation of key fields, and consistency checks across multiple documents.

- /index: for automated indexing and tagging of documents, linking content to metadata and making it instantly searchable through the RAG layer.

- /classify: for intelligent document categorization based on content, type, or business logic (e.g., invoices, contracts, financial statements, HR forms).

- /rename: for AI-driven document renaming according to custom patterns such as vendor, date, project, or classification type.

- /analyze: for deep document analysis, pattern recognition, discrepancy detection, and risk identification across large datasets.
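Only the endpoint names above come from elDoc; their request formats are not described here. The sketch below shows how an internal service might build an authenticated JSON call to one of them using only the Python standard library. The base URL, the bearer-token header, and the payload fields are hypothetical placeholders. The request is built but not sent, since in practice it would only resolve inside your network.

```python
import json
import urllib.request

# Hypothetical base URL: the host, port, and path prefix are placeholders.
BASE_URL = "https://eldoc.internal.example:8443/api"

# Endpoint names as listed in the article; their payload schemas are assumed.
ENDPOINTS = {"generate", "chat", "extract_fields", "index", "classify", "rename", "analyze"}


def build_llm_request(endpoint: str, payload: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST to an internal endpoint."""
    if endpoint not in ENDPOINTS:
        raise ValueError(f"unknown endpoint: {endpoint}")
    return urllib.request.Request(
        f"{BASE_URL}/{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


req = build_llm_request(
    "extract_fields",
    {"document_id": "inv-001", "fields": ["vendor", "total"]},
    token="example-token",
)
# Inside the private network this could be sent with urllib.request.urlopen(req, timeout=30).
```

Rejecting unknown endpoint names on the client side mirrors the allow-list posture of a zero-trust gateway. The server must still enforce its own checks.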

All connections operate under strict zero-trust principles — fully isolated from external networks, with no outbound data transmission to third-party AI providers.

🧠 Support for Multiple, Cost-Efficient LLM Models

elDoc is model-agnostic and built to support a wide range of cost-efficient, locally deployable LLMs. Organizations can choose or integrate the model that best fits their operational, financial, and infrastructure requirements.

- elDoc supports open-source and commercial LLMs optimized for on-premise deployment, including lightweight models that can run on standard CPU infrastructure and high-performance options that leverage GPUs for advanced workloads.

- This flexibility enables clients to balance accuracy, speed, and cost, deploying the right model for each use case — from small internal analysis tasks to large-scale enterprise document processing.

- elDoc’s modular design also allows model updates, fine-tuning, or replacement without affecting the rest of the system, ensuring long-term adaptability as AI technology evolves.
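Model-agnostic designs usually hide each model behind a common interface, so callers never depend on a specific model. The sketch below illustrates that idea, assuming a single `generate(prompt)` method. `ModelRouter` assigns each task to a backend and falls back to a default, so swapping or upgrading a model is a one-line change. `EchoBackend` and the model names are placeholders. A real backend would wrap whichever local inference server or library you deploy.

```python
from typing import Protocol


class LLMBackend(Protocol):
    """Any object with a name and a generate(prompt) method can serve as a model."""
    name: str

    def generate(self, prompt: str) -> str: ...


class EchoBackend:
    """Placeholder backend for illustration; it echoes the start of the prompt."""

    def __init__(self, name: str):
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt[:40]}"


class ModelRouter:
    """Maps each task to a backend so models can be swapped per use case
    without touching the code that calls them."""

    def __init__(self, default: LLMBackend):
        self._default = default
        self._routes = {}

    def assign(self, task: str, backend: LLMBackend) -> None:
        self._routes[task] = backend

    def run(self, task: str, prompt: str) -> str:
        return self._routes.get(task, self._default).generate(prompt)


router = ModelRouter(default=EchoBackend("small-cpu-model"))
router.assign("analyze", EchoBackend("large-gpu-model"))
```

In this arrangement, a lightweight CPU model can handle routine classification. A larger GPU-backed model can be reserved for deep analysis, which matches the accuracy, speed, and cost trade-off described above.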

🔒 Security and Independence

Because all inference and reasoning take place within your private environment, sensitive document data never leaves your organization. This provides full data sovereignty and a strong foundation for compliance and auditability, while eliminating the risks of sending data to cloud-based AI services.

With elDoc, every AI operation — from reading and analyzing contracts to classifying invoices or generating reports — is performed by your own private LLM. You retain total control, transparency, and independence, while benefiting from the efficiency, scalability, and cost-optimization of a flexible, local AI architecture.

💼 5. Application Layer – elDoc Document Intelligence

The Application Layer is where users experience the true power of elDoc’s intelligent document ecosystem — an intuitive, multilingual, and feature-rich interface that connects people, documents, and AI within a single, secure environment.

🧭 User-Friendly, Multilingual Experience

elDoc is designed for accessibility and simplicity, ensuring that even complex AI operations are effortless for end users.

- 🌍 The platform interface currently supports multiple languages — English, Spanish, Chinese, and Ukrainian — making it globally adaptable and suitable for multinational teams.

- 🪄 A clean, modern, and responsive design allows users to interact naturally with documents: to ask questions, search, extract data, or automate workflows — all with just a few clicks, no technical knowledge required.

Users work entirely through a unified, web-based interface, seamlessly connected to the underlying AI, storage, and workflow layers.

⚙️ Integrated Functional Modules

elDoc goes far beyond traditional document management systems — it’s an end-to-end intelligent automation platform with a modular architecture. Each module is tightly integrated with the LLM engine and security framework, enabling full control and flexibility.

- ✍️ eSignature: Enables secure electronic document signing, approval routing, and audit tracking with legally compliant, time-stamped records.

- 🤝 File Collaboration: Multiple users can collaborate in real time — viewing, annotating, commenting, or editing shared documents.

- 🔒 Secure File Sharing: Share documents externally with advanced security controls such as OTP authentication, password protection, and link expiration.

- 🕒 Version Control: Automatically maintains historical versions of documents, allowing users to view, compare, or restore earlier iterations.

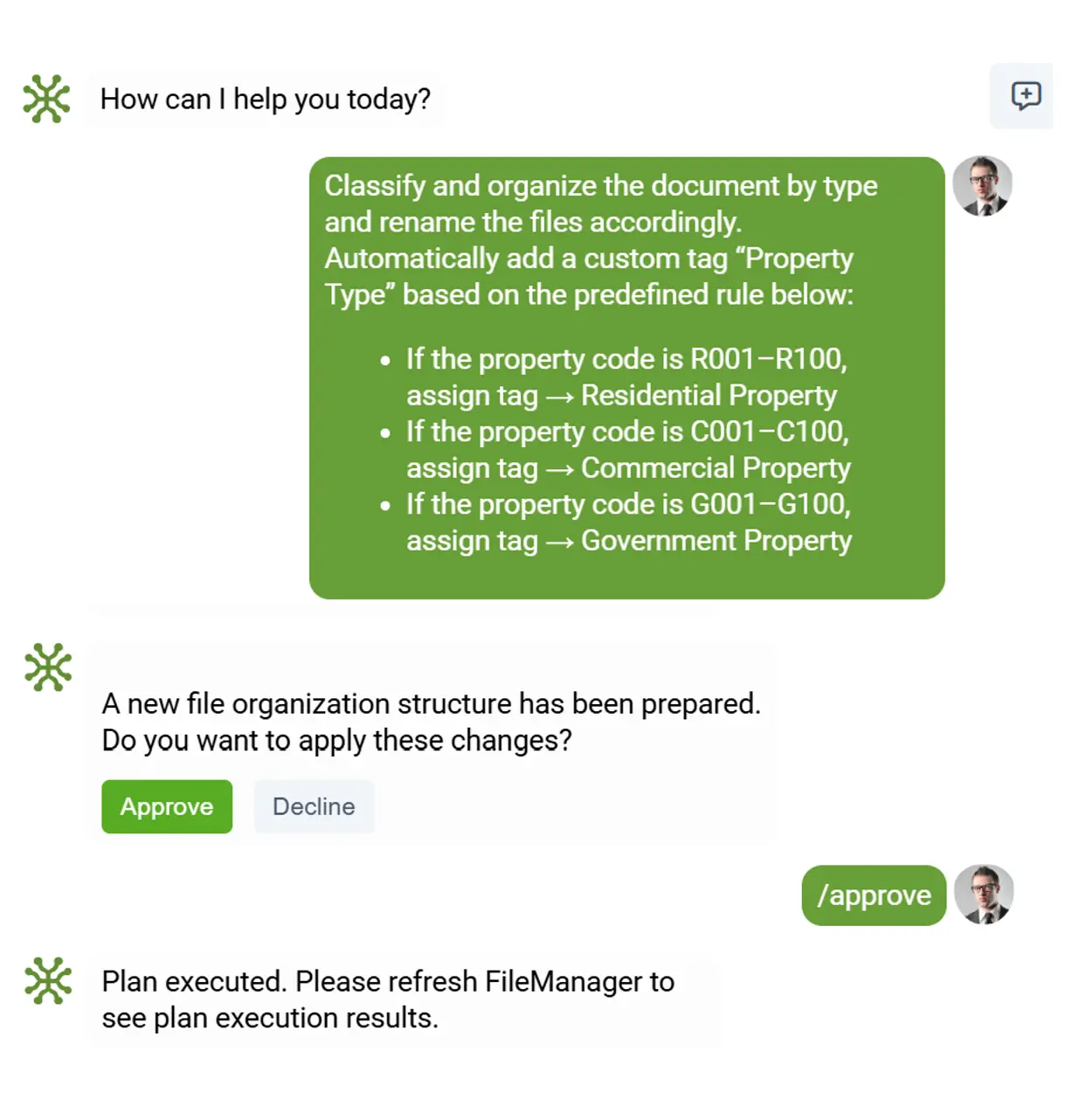

- 🧠 Intelligent Document Processing: Supports AI-powered classification, extraction, indexing, and document renaming directly from the interface.

- 🔄 Workflow Automation (No-Code): Users can easily build and automate business processes without writing a single line of code, from invoice approval chains to contract review flows, enabling seamless automation across departments.

Each module operates within the same secured environment and can be customized to align with internal business rules, compliance standards, or regional requirements.
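Link expiration for external sharing is commonly implemented with signed, time-limited tokens. The sketch below shows that general technique, using an HMAC over the document ID and expiry time. It is not elDoc's implementation, and the secret and token layout are assumptions. It covers expiry and tamper detection only; OTP and password checks would be separate factors layered on top.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical secret; in practice it would live in a server-side key store.
SECRET = b"replace-with-a-server-side-secret"


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def make_share_token(document_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Encode document ID, expiry time, and signature into a URL-safe token."""
    issued = time.time() if now is None else now
    payload = f"{document_id}:{int(issued + ttl_seconds)}"
    return base64.urlsafe_b64encode(f"{payload}:{_sign(payload)}".encode("utf-8")).decode("ascii")


def verify_share_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the document ID if the token is authentic and unexpired, else None."""
    try:
        raw = base64.urlsafe_b64decode(token).decode("utf-8")
        document_id, expires_str, sig = raw.rsplit(":", 2)
        expires = int(expires_str)
    except ValueError:  # bad base64, bad UTF-8, wrong field count, or bad integer
        return None
    expected = _sign(f"{document_id}:{expires_str}")
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig.encode("utf-8"), expected.encode("utf-8")):
        return None
    current = time.time() if now is None else now
    return document_id if current < expires else None
```

Because the expiry time is covered by the signature, a recipient cannot extend a link by editing it. Any change to the document ID or timestamp invalidates the token.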

🤖 AI-Powered End-User Applications

Within the elDoc interface, users can directly interact with the LLM and AI components to perform a wide range of document intelligence tasks:

- 🔍 Natural-Language Document Search & Chat: Ask complex questions across millions of files — the LLM instantly provides context-aware answers grounded in your own documents.

- 📊 Automated Data Extraction & Validation: Extract key values from invoices, contracts, reports, or statements with high accuracy and automatic validation rules.

- 🗂️ Document Classification & Renaming: Automatically categorize, tag, and rename files by type, vendor, year, or department using AI-driven logic.

- ⚠️ Risk Analysis & Anomaly Detection: Identify discrepancies, missing documents, or irregular data patterns across related files (e.g., invoices vs. contracts).

All these operations are powered by elDoc’s on-premise AI runtime and RAG-based document intelligence, ensuring that every search or action remains secure, traceable, and compliant.
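The following rule-based sketch illustrates the kind of cross-document check behind invoice-versus-contract anomaly detection. It runs on fields already extracted from both document types. The `Contract` and `Invoice` records, including the per-invoice `max_amount` ceiling, are hypothetical. In elDoc the LLM can surface such discrepancies from the documents themselves; this example only shows the comparisons in deterministic form.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Contract:
    contract_id: str
    vendor: str
    start: date
    end: date
    max_amount: float  # hypothetical contracted ceiling per invoice


@dataclass
class Invoice:
    invoice_id: str
    vendor: str
    issued: date
    amount: float
    contract_id: Optional[str] = None


def find_discrepancies(invoices: list, contracts: list) -> list:
    """Return (invoice_id, issue) pairs for invoices that do not match their contract."""
    by_id = {c.contract_id: c for c in contracts}
    issues = []
    for inv in invoices:
        contract = by_id.get(inv.contract_id)
        if contract is None:
            issues.append((inv.invoice_id, "no matching contract"))
            continue
        if inv.vendor != contract.vendor:
            issues.append((inv.invoice_id, "vendor differs from contract"))
        if not contract.start <= inv.issued <= contract.end:
            issues.append((inv.invoice_id, "issued outside contract term"))
        if inv.amount > contract.max_amount:
            issues.append((inv.invoice_id, "amount exceeds contract limit"))
    return issues
```

An invoice can trigger several issues at once, so the result lists each finding separately rather than stopping at the first one.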

🧰 Administrative Tools & Integration Layer in elDoc

For administrators, elDoc provides a powerful control center to manage configurations, permissions, and integrations:

- A centralized dashboard for monitoring workflows, document activities, and system health.

- Comprehensive audit logs that track every document access and workflow event.

- Integration APIs that connect elDoc to core enterprise systems such as ERP, AP, and CRM, ensuring smooth data flow across the organization.

- Data export in multiple formats (JSON, CSV) for downstream analytics, reporting, or external system synchronization.
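The export step can be sketched with the standard library: the function below serializes extracted records to JSON or CSV. Stored documents do not all share one schema, so the CSV header is the union of all keys and missing values are left blank. The function name and record fields are illustrative assumptions, not elDoc's export API.

```python
import csv
import io
import json


def export_records(records: list, fmt: str) -> str:
    """Serialize a list of dicts as JSON or CSV text."""
    if fmt == "json":
        return json.dumps(records, indent=2, ensure_ascii=False)
    if fmt == "csv":
        # Union of keys across records, sorted for a stable column order.
        fieldnames = sorted({key for record in records for key in record})
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(records)
        return buf.getvalue()
    raise ValueError(f"unsupported format: {fmt}")
```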

Security & Governance Layer — Complete Control, Compliance, and Data Protection

In elDoc, security and governance are not features — they are the foundation. Every component, from the database to the AI layer, is built around zero-trust principles, ensuring that users, systems, and AI models only access what they are explicitly authorized to. As organizations adopt AI for document processing, one of the most critical challenges is preventing unauthorized information access. Not every employee, department, or AI prompt should be able to query or retrieve data they are not permitted to view. elDoc solves this through granular permission control, role-based governance, and multi-layer security enforcement across the entire platform.

🧩 Defense-in-Depth Security Model

elDoc implements multiple, interlocking security layers designed to protect your data at every stage — from ingestion and storage to AI inference and sharing:

- Application-Level Security

  - All users authenticate through Single Sign-On (SSO) or directory-based identity management systems (e.g., Azure AD, Okta).

  - Multi-Factor Authentication (MFA) adds an additional layer of verification, protecting against unauthorized logins.

  - One-Time Passcode (OTP) access can be enforced for critical operations or external file sharing.

- Role-Based Access Control (RBAC)

  - Each user is assigned specific roles, such as Viewer, Editor, Approver, Administrator, or AI Analyst, defining exactly what actions they can perform within the system.

  - Permissions extend down to individual actions (view, edit, download, delete, share, or sign).

  - Administrators can create custom roles and permission groups, tailoring security policies to departmental or compliance needs.

- AI Access Governance

  - elDoc introduces AI-level permission controls, ensuring that the LLM can only retrieve and analyze documents the user is authorized to access.

  - This prevents “AI overexposure,” where someone might try to ask the model about restricted data (e.g., HR, legal, or financial information).

  - All AI queries are filtered through the user’s access profile, so the model’s retrieval process respects the same visibility rules as the underlying data layer.

- Data Protection & Encryption

  - All documents and metadata are encrypted at rest using MongoDB’s native encryption engine and encrypted in transit via TLS 1.3.

  - elDoc supports field-level encryption for sensitive data (e.g., PII, payment details, client IDs).

  - Secure key management ensures encryption keys remain under client control and are never exposed to external systems.

- Network Security & Isolation

  - The entire platform can operate fully offline or within a segmented internal network, ensuring no external data transmission.

  - Internal APIs communicate through secure, authenticated channels.

  - API gateways enforce firewall rules, throttling, and certificate-based authentication.

- Monitoring, Auditing & Compliance

  - elDoc provides real-time monitoring dashboards showing user activities, AI interactions, and system performance.

  - Every access, change, and AI query is logged with full audit metadata (user ID, document ID, timestamp, action type).
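The AI access-governance rule says that retrieval respects the same visibility rules as the data layer. It can be sketched by combining role-based action permissions with a document-scope filter, applied before any text reaches the LLM. The role table reuses the role names from the list above. The permission sets, the `ai_query` action, and department-based scoping are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical permission sets; role names mirror the examples in the text.
ROLE_PERMISSIONS = {
    "Viewer": {"view"},
    "Editor": {"view", "edit", "download"},
    "Approver": {"view", "sign"},
    "AI Analyst": {"view", "ai_query"},
    "Administrator": {"view", "edit", "download", "delete", "share", "sign", "ai_query"},
}


@dataclass
class User:
    user_id: str
    roles: set
    departments: set  # hypothetical visibility scope, e.g. {"finance"}


@dataclass
class DocChunk:
    doc_id: str
    department: str
    text: str


def can(user: User, action: str) -> bool:
    """True if any of the user's roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def retrieve_for_user(user: User, candidates: list) -> list:
    """Drop chunks the user may not see BEFORE they are placed in an LLM prompt."""
    if not can(user, "ai_query"):
        raise PermissionError(f"{user.user_id} is not allowed to query the AI")
    return [c for c in candidates if c.department in user.departments]
```

Filtering before prompt construction matters. Once restricted text is inside the prompt, no instruction to the model can reliably keep it out of the answer.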

Bring AI / LLM to Your Documents – Securely and Locally with elDoc

Adopting AI and LLM technologies on-premise is not just a technical choice — it’s the most secure and future-ready way to benefit from modern AI capabilities while keeping full control of your data. Building such an architecture from scratch could take years of research, development, and integration.

elDoc accelerates this journey with a proven, production-ready platform that has evolved through years of practical deployments across multiple industries, business functions, and countries. Every component of elDoc — from intelligent document processing and workflow automation to eSignature, collaboration, and local LLM integration — reflects real-world experience and enterprise-grade reliability.

By adopting elDoc, organizations gain immediate access to LLM-powered document intelligence, workflow automation, and secure data governance, all within a unified on-premise or private-cloud environment. It’s not just adopting AI — it’s adopting AI with purpose, security, and experience built-in.

elDoc brings together everything you need to manage, understand, and automate your documents — powered by LLM, designed for security, and proven in practice.

Let's get in touch

Get your free elDoc Community Version - deploy your preferred LLM locally

Get your questions answered or schedule a demo to see our solution in action — just drop us a message